What Exactly Is a Data Pipeline?

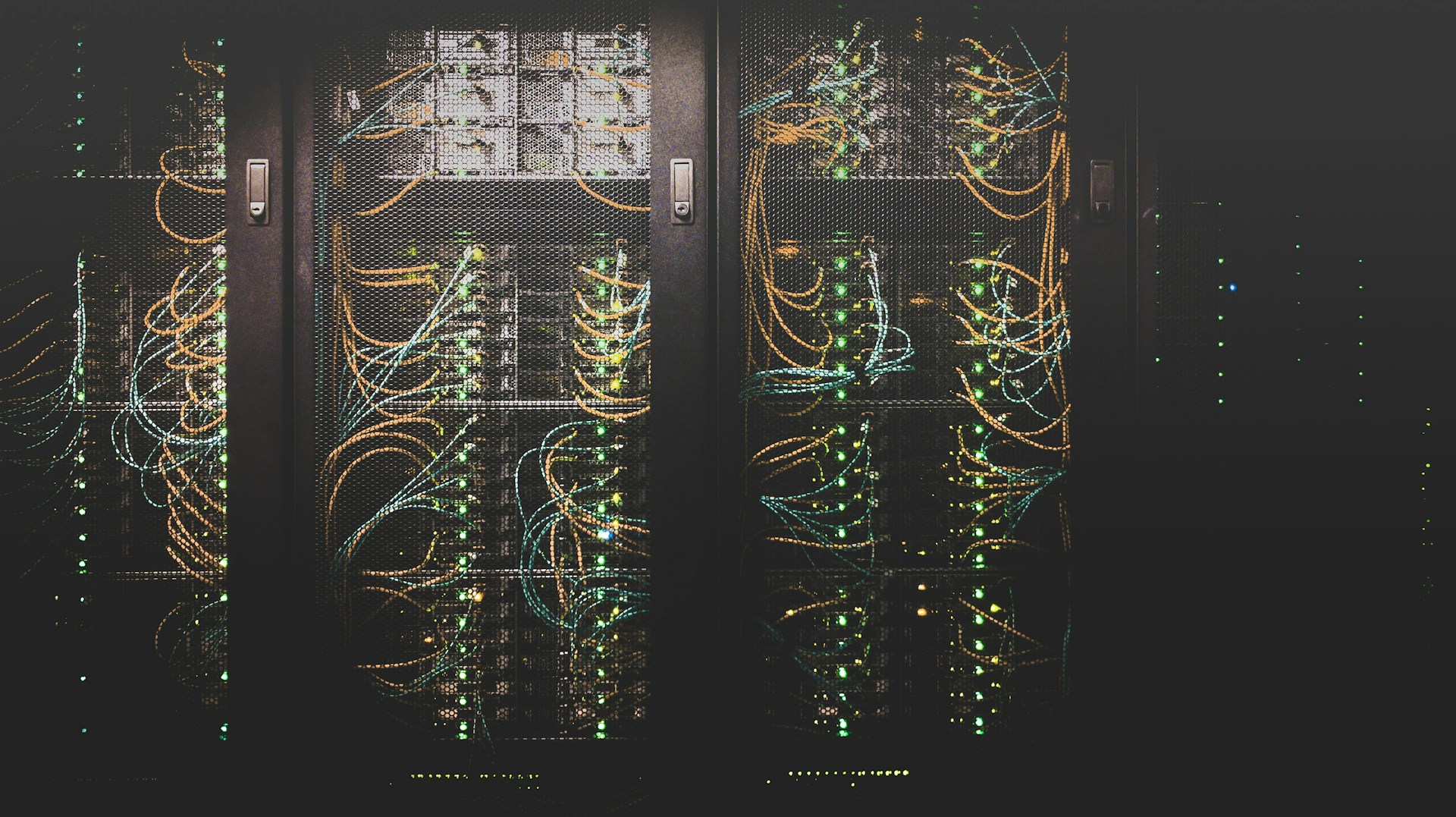

In today’s data-driven world, organizations rely on data to make informed decisions, drive innovation, and stay competitive. However, raw data is often messy, scattered across many sources, and not immediately usable. This is where data pipelines come into play. But what exactly is a data pipeline? Let’s break it down.

Definition of a Data Pipeline

A data pipeline is a series of processes that automate the movement and transformation of data from one system to another. Think of it as a pathway that raw data travels through to become valuable insights. The pipeline’s primary goal is to ensure data is collected, processed, and delivered reliably and efficiently.

A data pipeline typically involves three main stages:

- Ingestion: Capturing raw data from various sources such as databases, APIs, sensors, or user inputs.

- Processing: Cleaning, transforming, and enriching the data to make it usable. This may involve filtering, aggregating, or even applying machine learning models.

- Storage and Output: Delivering the processed data to a destination like a database, data warehouse, or visualization tool for analysis.
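To make the three stages concrete, here is a minimal Python sketch that runs them end to end on a tiny in-memory dataset. The CSV contents, field names, and the functions `ingest`, `process`, `store`, and `run_pipeline` are hypothetical; a real pipeline would read from an actual source and write to a database or warehouse rather than a string buffer.

```python
import csv
import io
import json

# Hypothetical raw input standing in for a real source (an API, a log file, a database export).
RAW_CSV = """order_id,amount,country
1,19.99,US
2,,DE
3,5.00,us
"""


def ingest(raw_text: str) -> list[dict]:
    """Ingestion: read raw records from the source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def process(records: list[dict]) -> list[dict]:
    """Processing: drop incomplete rows, convert types, and normalize casing."""
    cleaned = []
    for row in records:
        if not row["amount"]:  # filter out rows with a missing amount
            continue
        cleaned.append(
            {
                "order_id": int(row["order_id"]),
                "amount": float(row["amount"]),
                "country": row["country"].upper(),
            }
        )
    return cleaned


def store(records: list[dict], destination: io.StringIO) -> None:
    """Storage and output: write one JSON object per line to the destination."""
    for row in records:
        destination.write(json.dumps(row) + "\n")


def run_pipeline(raw_text: str) -> str:
    """Chain the three stages and return what was written to the destination."""
    sink = io.StringIO()
    store(process(ingest(raw_text)), sink)
    return sink.getvalue()


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV), end="")
```

Running it prints two JSON lines: the row with a missing amount is filtered out during processing, and the lowercase `us` is normalized to `US`.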

Key Components of a Data Pipeline

Building a data pipeline requires multiple components that work together seamlessly. These include:

- Data Sources: The origin of raw data, which can include operational databases, external APIs, log files, or IoT devices.

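- Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT): In ETL, data is transformed before it reaches the destination; in ELT, raw data is loaded first and transformed inside the destination system, typically a cloud data warehouse.

  - Extract: Pulling data from source systems.

  - Transform: Cleaning and modifying the data into the desired format.

  - Load: Writing the data into its destination.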

- Workflow Orchestration: Tools like Apache Airflow or Prefect manage the scheduling and monitoring of pipeline tasks.

- Data Processing Frameworks: Frameworks like Apache Spark or Flink handle the heavy lifting of data transformation and computation.

- Storage Systems: Processed data typically lands in object storage or data lakes (such as Amazon S3), operational databases, or cloud data warehouses (such as Snowflake or BigQuery).
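The difference between ETL and ELT comes down to where the transformation runs. The sketch below uses Python's built-in `sqlite3` module as a stand-in destination: the ETL path converts cents to dollars in application code before loading, while the ELT path loads the raw values first and transforms them with SQL inside the database. The table names, sample rows, and functions `etl` and `elt` are hypothetical.

```python
import sqlite3

# Hypothetical source rows: (order_id, amount_in_cents)
SOURCE_ROWS = [(1, 1999), (2, 500), (3, 12000)]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code, then load the finished rows."""
    transformed = [(oid, cents / 100) for oid, cents in SOURCE_ROWS]  # cents -> dollars
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, amount_usd REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", transformed)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw rows first, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id INTEGER, amount_cents INTEGER)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", SOURCE_ROWS)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT order_id, amount_cents / 100.0 AS amount_usd FROM orders_raw"
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    etl(conn)
    elt(conn)
    print(conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall())
    print(conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall())
```

Both paths end with the same table contents. The trade-off is mostly about where compute happens and whether raw data is kept: ELT leaves `orders_raw` in the destination for later re-transformation, while ETL only ever writes cleaned rows there.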

Types of Data Pipelines

Data pipelines come in various forms depending on the needs of an organization:

- Batch Pipelines: Process data in chunks at scheduled intervals (e.g., daily sales reports).

- Real-Time Pipelines: Process streaming data continuously as events arrive, with low latency (e.g., live stock prices).

- Hybrid Pipelines: Combine batch and real-time processing to accommodate diverse use cases.
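The practical difference between batch and real-time processing can be sketched in a few lines of Python. Both functions below compute total sales: the batch version waits for the full chunk, while the streaming version, written as a generator, updates its result after every event. The function names and the running-total example are illustrative assumptions, not the API of any streaming framework.

```python
from typing import Iterable, Iterator


def batch_total(sales: list[float]) -> float:
    """Batch: the whole chunk is available up front and processed in one pass (e.g., nightly)."""
    return sum(sales)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    """Streaming: each event is processed as it arrives, emitting an updated result every time."""
    running = 0.0
    for amount in events:
        running += amount
        yield running


if __name__ == "__main__":
    day = [10.0, 2.5, 7.5]
    print(batch_total(day))             # 20.0
    print(list(streaming_totals(day)))  # [10.0, 12.5, 20.0]
```

The batch function produces one answer after all the data is in, whereas the generator yields one answer per arriving event. A hybrid pipeline would run both styles over the same source: the streaming path for live dashboards and the batch path for complete, reconciled reports.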

Why Are Data Pipelines Important?

- Automation: Pipelines minimize manual intervention, making data processing faster and less error-prone.

- Scalability: They can handle large volumes of data as businesses grow.

- Consistency: Pipelines ensure data is processed uniformly, maintaining data integrity.

- Actionable Insights: By organizing and processing data, pipelines enable better decision-making.

Challenges in Building Data Pipelines

Creating a robust data pipeline isn’t without its hurdles:

- Data Quality: Ensuring the input data is accurate and clean.

- System Compatibility: Integrating different tools and platforms.

- Performance: Maintaining low latency in real-time pipelines.

- Scalability: Designing for future growth.
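Data quality problems are often handled by validating records at the entry point and routing bad rows aside instead of letting them flow downstream. A minimal sketch of that idea follows; the field names (`user_id`, `age`), the `ValidationResult` class, and the specific rules are hypothetical examples, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationResult:
    valid: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, str]] = field(default_factory=list)  # (record, reason)


def validate(records: list[dict]) -> ValidationResult:
    """Split records into valid rows and rejected rows, each with a reason (hypothetical rules)."""
    result = ValidationResult()
    seen_ids: set[int] = set()
    for rec in records:
        if rec.get("user_id") is None:
            result.rejected.append((rec, "missing user_id"))
        elif rec["user_id"] in seen_ids:
            result.rejected.append((rec, "duplicate user_id"))
        elif not isinstance(rec.get("age"), int) or not 0 <= rec["age"] <= 120:
            result.rejected.append((rec, "age out of range"))
        else:
            seen_ids.add(rec["user_id"])
            result.valid.append(rec)
    return result


if __name__ == "__main__":
    batch = [
        {"user_id": 1, "age": 34},
        {"user_id": None, "age": 20},
        {"user_id": 1, "age": 34},
        {"user_id": 2, "age": -5},
    ]
    out = validate(batch)
    print(out.valid)
    print([reason for _, reason in out.rejected])
```

Keeping rejected rows together with a reason, rather than silently dropping them, makes quality issues visible and lets someone fix the source or reprocess the rows later.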

Tools and Technologies for Data Pipelines

Numerous tools help build and manage data pipelines, each catering to specific needs:

- Ingestion Tools: Apache Kafka, Amazon Kinesis, Google Cloud Pub/Sub.

- ETL Tools: Talend, Informatica, Matillion.

- Data Processing: Apache Spark, Apache Flink, Google Cloud Dataflow.

- Workflow Orchestration: Apache Airflow, Luigi, Prefect.

- Visualization: Tableau, Power BI, Looker.
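At their core, orchestrators such as Airflow, Luigi, and Prefect run tasks in dependency order (a directed acyclic graph) and retry failures. The toy runner below illustrates only that idea using Python's standard `graphlib` module (Python 3.9+); it is not the API of any of those tools, and `run_dag`, `max_retries`, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_dag(
    tasks: dict[str, Callable[[], None]],
    deps: dict[str, set[str]],
    max_retries: int = 2,
) -> list[str]:
    """Run every task after its upstream dependencies, retrying failures.

    `deps` maps each task name to the set of task names it depends on.
    Returns the task names in the order they completed.
    """
    completed = []
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break  # success: move on to the next task
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: fail the whole run
        completed.append(name)
    return completed


if __name__ == "__main__":
    log: list[str] = []
    tasks = {
        "extract": lambda: log.append("extract"),
        "transform": lambda: log.append("transform"),
        "load": lambda: log.append("load"),
    }
    deps = {"transform": {"extract"}, "load": {"transform"}}
    print(run_dag(tasks, deps))  # ['extract', 'transform', 'load']
```

Real orchestrators add much more on top of this (scheduling, parallel execution, persisted state, alerting, a UI), but dependency ordering plus retries is the part that distinguishes a pipeline from a loose collection of scripts.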

Real-World Example of a Data Pipeline

Imagine an e-commerce company that wants to analyze customer behavior:

- Ingestion: User interactions are captured via website logs and mobile app analytics.

- Processing: The raw data is cleaned to remove duplicates, then enriched with geolocation data to understand regional trends.

- Storage: Processed data is stored in a cloud data warehouse like Snowflake.

- Output: The marketing team visualizes the data in Tableau to create targeted campaigns.
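The processing step of this example can be sketched in plain Python. The events, the IP-prefix-to-region table standing in for a real geolocation service, and all function names are hypothetical; the IP addresses come from ranges reserved for documentation.

```python
from collections import Counter

# Hypothetical raw interaction events from website logs and mobile app analytics.
EVENTS = [
    {"event_id": "e1", "user": "u1", "action": "view", "ip": "203.0.113.5"},
    {"event_id": "e1", "user": "u1", "action": "view", "ip": "203.0.113.5"},  # duplicate
    {"event_id": "e2", "user": "u2", "action": "purchase", "ip": "198.51.100.7"},
    {"event_id": "e3", "user": "u3", "action": "purchase", "ip": "203.0.113.9"},
]

# Hypothetical stand-in for a geolocation service: maps an IP prefix to a region.
GEO_LOOKUP = {"203.0.113.": "EU-West", "198.51.100.": "US-East"}


def deduplicate(events: list[dict]) -> list[dict]:
    """Keep the first occurrence of each event_id."""
    seen: set[str] = set()
    unique = []
    for ev in events:
        if ev["event_id"] not in seen:
            seen.add(ev["event_id"])
            unique.append(ev)
    return unique


def enrich_with_region(events: list[dict]) -> list[dict]:
    """Attach a region to each event based on its IP prefix ("unknown" if none match)."""
    enriched = []
    for ev in events:
        region = next(
            (r for prefix, r in GEO_LOOKUP.items() if ev["ip"].startswith(prefix)),
            "unknown",
        )
        enriched.append({**ev, "region": region})
    return enriched


def purchases_by_region(events: list[dict]) -> Counter:
    """Aggregate purchase counts per region for regional trend analysis."""
    return Counter(ev["region"] for ev in events if ev["action"] == "purchase")


if __name__ == "__main__":
    clean = enrich_with_region(deduplicate(EVENTS))
    print(dict(purchases_by_region(clean)))  # {'US-East': 1, 'EU-West': 1}
```

In the scenario above, the output of a step like `purchases_by_region` is what would be loaded into the warehouse and then charted in the BI tool.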

Final Thoughts

A data pipeline is the backbone of modern data operations, bridging the gap between raw data and actionable insights. As businesses generate and rely on increasingly vast amounts of data, understanding and leveraging data pipelines is more critical than ever. Whether you’re a data engineer, analyst, or decision-maker, grasping the fundamentals of data pipelines empowers you to unlock the true potential of your data.

This video from IBM excels at explaining data pipelines. Check it out below and set up your Data Strategy Session with Dieseinerdata!